At its swampUP 2023 conference, JFrog today added security capabilities to its continuous integration/continuous delivery (CI/CD) environment and made it possible to manage machine learning models within the context of a DevOps workflow.

The latest edition of the JFrog Software Supply Chain Platform adds an integrated static application security testing (SAST) tool to scan source code alongside the existing JFrog Xray tool for scanning binaries.

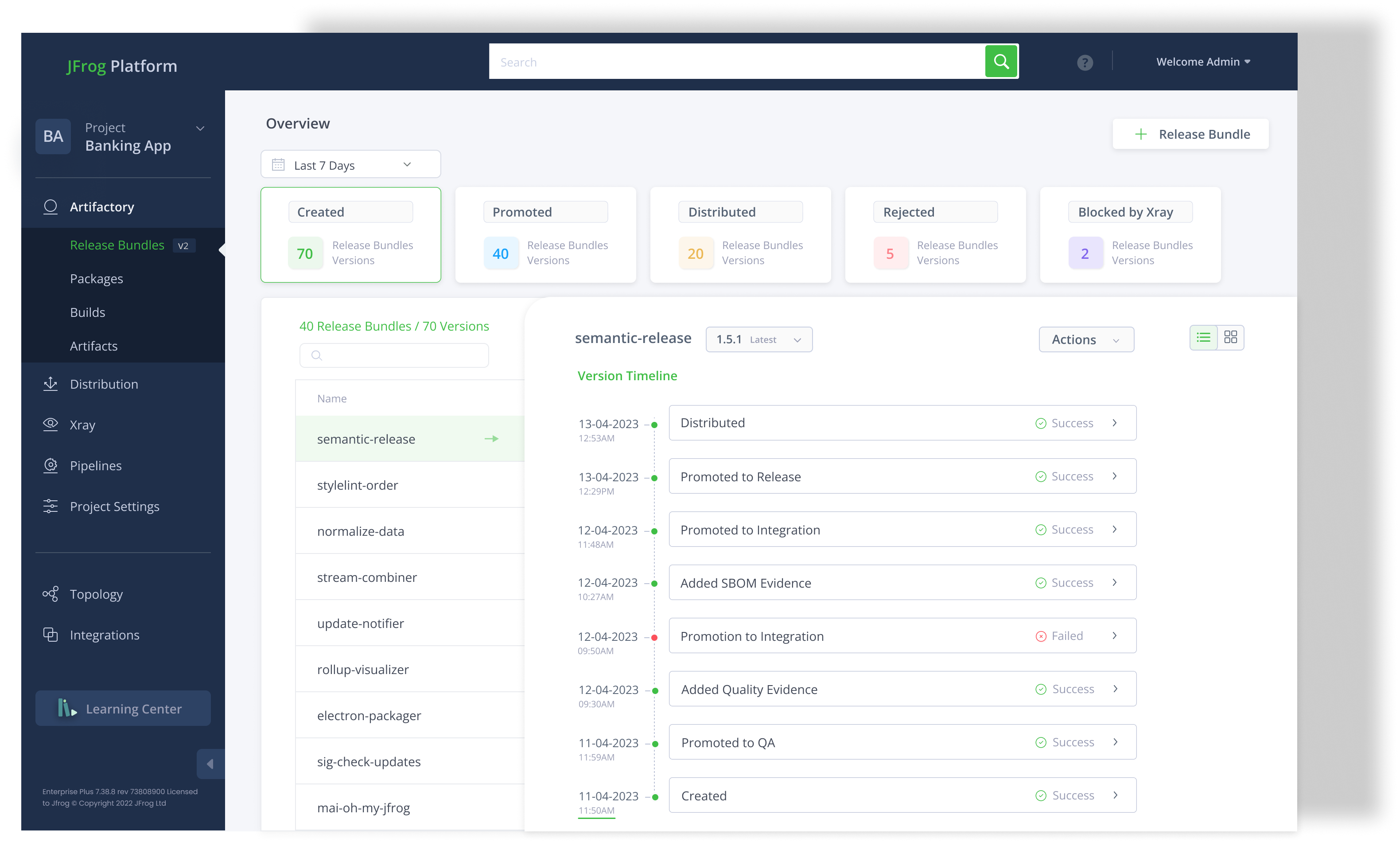

JFrog has also added an Open-Source Software (OSS) Catalog to the JFrog Curation service to make it simpler for DevOps teams to discover packages that have been vetted as secure. In addition, JFrog is adding Release Lifecycle Management (RLM) capabilities that aggregate software artifacts into an immutable software package, which serves as the single source of truth as multiple iterations of an application are developed. JFrog RLM uses anti-tampering systems, compliance checks and evidence capture to collect the data and insights needed to ensure signed binaries remain immutable.
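The announcement does not describe how RLM enforces immutability. As a rough illustration of the general idea only, the Python sketch below records a SHA-256 digest for each artifact in a release, plus a digest over the whole manifest, so that any later change to an artifact is detectable. The functions `build_bundle` and `verify_bundle` are hypothetical and do not reflect JFrog's API; a production system would also cryptographically sign the bundle digest so that the manifest itself cannot be silently rewritten.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of raw bytes."""
    return hashlib.sha256(data).hexdigest()


def build_bundle(name: str, version: str, artifacts: dict[str, bytes]) -> dict:
    """Hypothetical release bundle: one digest per artifact plus a bundle digest.

    The bundle digest is computed over a canonical JSON encoding of the
    manifest, so changing any artifact (or its path) changes the result.
    """
    manifest = {
        "name": name,
        "version": version,
        "artifacts": {path: sha256_hex(content) for path, content in sorted(artifacts.items())},
    }
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode()
    return {"manifest": manifest, "bundle_digest": sha256_hex(canonical)}


def verify_bundle(bundle: dict, artifacts: dict[str, bytes]) -> bool:
    """Recompute the bundle from the supplied artifacts and compare digests."""
    rebuilt = build_bundle(bundle["manifest"]["name"], bundle["manifest"]["version"], artifacts)
    return rebuilt["bundle_digest"] == bundle["bundle_digest"]


if __name__ == "__main__":
    artifacts = {"app.jar": b"binary-v1", "model.onnx": b"weights-v1"}
    bundle = build_bundle("my-app", "1.0.0", artifacts)
    print(verify_bundle(bundle, artifacts))  # True: nothing has changed

    # Swapping in different model weights is caught by the digest comparison.
    tampered = {**artifacts, "model.onnx": b"weights-poisoned"}
    print(verify_bundle(bundle, tampered))  # False
```

Hashing a canonical (sorted, compact) JSON encoding keeps the bundle digest stable regardless of dictionary insertion order, which matters when the same release is rebuilt on different machines.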

Finally, ML Model Management capabilities, available in beta, are being added to the platform to make it possible to store AI models, proxy and cache AI models from Hugging Face, a provider of open source AI models, via an application programming interface (API), and apply DevSecOps best practices to govern and secure those models and ensure licensing compliance.

JFrog CTO Yoav Landman said that as DevOps teams become more familiar with AI models, it’s becoming increasingly clear that those models can be managed like any other software artifact incorporated into a DevOps pipeline. He noted that this approach also helps ensure AI models have not been poisoned with malicious data or prompts designed to deliberately cause hallucinations.

Most AI models today are created by data scientists using machine learning operations (MLOps) best practices that each organization defines. In some instances, those processes include what is known as a feature store that enables data scientists to track which components are being used at any given time. However, once a model is completed, it needs to be integrated into an application—a process that, from a DevOps perspective, is best facilitated by storing an AI model in a Git repository alongside other components that need to be managed.

It’s still early days as far as the convergence of DevOps and MLOps practices is concerned. Going forward, as it becomes apparent that almost every application will invoke some type of AI model, the need to integrate these workflows will become more pressing.

It’s now only a matter of time before AI models become a pervasive element of the software development life cycle as they are continuously retrained and updated. It will generally fall to DevOps teams to manage the deployment and updating of those AI models in collaboration with the data science teams that train them. The immediate challenge, of course, will be to bridge the significant cultural divide between two very different types of IT professionals.