Amid all the hype over AI, let’s concentrate on the real value it can provide, especially for busy IT Ops teams.

2018 is already shaping up to be the year when AI and machine learning (ML) go mainstream, at least in terms of public awareness if not yet of actually usable products. As I write this, the annual CES event (the show that is, Prince-style, “formerly known as The International Consumer Electronics Show”) is in full swing. The usual suspects are out in force, with Amazon’s Alexa getting embedded in more and more products and Google showcasing its own consumer AI capabilities.

Less expected AI adopters included Ferrari. There were also some notable failed demos: an AI-enabled suitcase repeatedly ran away from its owner, and an AI refused to answer questions during an on-stage demo. All of this led the BBC to ask, “When will AI deliver for humans?”

What Happens in Vegas, Stays in Vegas

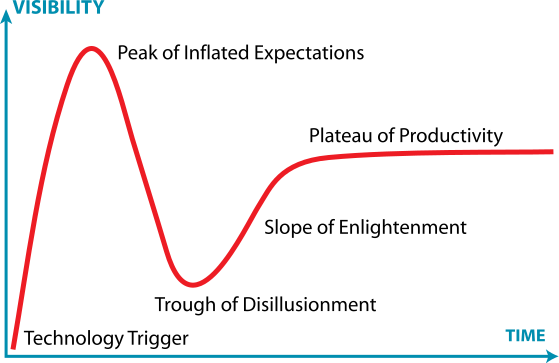

Of course, much of what comes out of CES is frivolous, but AI and ML have all sorts of more serious applications. The problem is that whenever there is this much hype, disappointment and disillusionment are not far behind. Gartner’s famous Hype Cycle model helps explain what tends to happen next.

The conventional concern is that overly optimistic projects launched at the Peak of Inflated Expectations risk failing. Those failures breed excessive caution, so fewer projects start during the Trough of Disillusionment, until a more realistic understanding sets in and the technology finally achieves true mainstream adoption. Because of the nature of AI and ML, though, there is a second failure mode that is far more insidious.

AI and ML generally operate as a black box. They produce results very quickly, without anyone having to develop deterministic rules, but it is generally not possible to determine why a specific result was generated or to troubleshoot failures in detail. As these techniques are used in the real world, problems that were not obvious in the lab begin to appear. If your smart fridge misreads the expiry date on that chicken (and you don’t think to check yourself), you might be in for an uncomfortable few hours. If your smart thermostat decides for some reason that your house should be as hot as physically possible, you’re still on the hook for the heating bill.

Those are the “nice” failure modes. If a facial recognition algorithm was trained on a data set without the right racial balance, you might wind up with some of your employees unable to get in the door, or some of your customers unable to unlock the devices you sell them.

It gets worse. Law enforcement has an obvious interest in facial recognition, but what if a faulty system places you at the scene of a riot? (Liberty has documented misidentifications during the Met’s facial recognition deployment at Notting Hill: https://www.liberty-human-rights.org.uk/news/blog/misidentification-and-improvised-rules-we-lift-lid-mets-notting-hill-facial-recognition) Even if you can prove your innocence, you might still face some difficult conversations with your family and your employer, and your neighbours may never forget the time you were hauled off in handcuffs.

Don’t Throw The AI Baby Out With The Hype Bathwater

So should we just forget about this AI nonsense? Far from it. In fact, I am personally convinced that AI is very much a key part of our future. It just needs to be used in the right way.

I mentioned training data above, and that is the key. AI systems need a lot of good data, and they need to be trained to make good assessments. Out there in the real world, both sides of that problem are very hard. Gathering enough data, making sure that the data gathered are actually representative of the problem space, dealing with privacy issues around personally identifiable information, and formatting the data correctly for ingestion by your machine learning system are far from trivial. And even once you have cleared that hurdle, the initial results will be neither very good nor very reliable. Intelligent assessment by humans who understand the domain you are training for is essential if your deep learning model is not to go off the rails.

Come Up to the IT Ops Lab

Seen in these terms, IT Ops is an ideal domain for these techniques. First of all, IT infrastructure already generates huge amounts of event data (Big Data, if you insist), and all of those events are already, by definition, formatted to be machine-readable. Sure, there are many different formats out there, from SNMP through various proprietary, product-specific formats to modern REST APIs, but it turns out that computers are pretty good at translating information from one representation to another.
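To make the translation point concrete, here is a minimal Python sketch that normalizes events from three feeds into one common record. Everything in it is an illustrative assumption: the `Event` fields, the shared 0-to-3 severity scale, the decoded-trap dictionary keys, the JSON field names and the pipe-delimited “legacy” format are all hypothetical, and a real SNMP trap would first have to be decoded by an SNMP library.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Event:
    """Hypothetical common, source-independent representation of an event."""
    source: str          # which feed the event came from
    host: str            # the affected device or service
    severity: int        # normalized scale: 0 = info ... 3 = critical
    message: str
    timestamp: datetime  # always timezone-aware


# Hypothetical severity vocabularies from different tools, mapped onto one scale.
SEVERITY_MAP = {
    "info": 0, "warning": 1, "minor": 1,
    "major": 2, "error": 2, "critical": 3,
}


def from_snmp_trap(trap: dict) -> Event:
    """Assumes the trap was already decoded into a dict (hypothetical keys)."""
    return Event(
        source="snmp",
        host=trap["agent_addr"],
        severity=SEVERITY_MAP.get(trap.get("severity", "info"), 0),
        message=trap["trap_name"],
        timestamp=datetime.fromtimestamp(trap["epoch"], tz=timezone.utc),
    )


def from_rest_json(payload: str) -> Event:
    """Assumes a JSON body with 'node', 'level', 'text' and ISO-8601 'time'."""
    data = json.loads(payload)
    return Event(
        source="rest",
        host=data["node"],
        severity=SEVERITY_MAP.get(data["level"].lower(), 0),
        message=data["text"],
        timestamp=datetime.fromisoformat(data["time"]),
    )


def from_pipe_delimited(line: str) -> Event:
    """Assumes a proprietary 'epoch|host|severity|message' text format."""
    epoch, host, sev, message = line.split("|", 3)
    return Event(
        source="legacy",
        host=host,
        severity=SEVERITY_MAP.get(sev.strip().lower(), 0),
        message=message.strip(),
        timestamp=datetime.fromtimestamp(int(epoch), tz=timezone.utc),
    )


# Three differently formatted events from the same incident, now in one shape.
events = [
    from_snmp_trap({"agent_addr": "10.0.0.5", "trap_name": "linkDown",
                    "severity": "major", "epoch": 1515575700}),
    from_rest_json('{"node": "web-01", "level": "CRITICAL", '
                   '"text": "HTTP 500 rate above threshold", '
                   '"time": "2018-01-10T09:15:30+00:00"}'),
    from_pipe_delimited("1515575745|db-01|warning|Replication lag 30s"),
]
```

Once every feed lands in the same shape, downstream analysis no longer needs to care which tool emitted a given event, and adding a new source means writing one more small adapter.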

Once the event data are in the AI system, it can apply whatever algorithms suit the task to make sense of them: which events are even significant, which ones are related to each other, what other relationships might exist, and so on. When it comes to evaluating the results, IT Ops again already has people, tools and processes working on precisely that question: is this a real issue, and if so, what should we do about it? If not, why not? This evaluation can be fed back to the learning system to improve its results next time, so that each iteration delivers more valuable and reliable results.
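The loop described above (filter for significance, group related events, fold human verdicts back in) can be sketched in a few lines of Python. This is a deliberately toy model, not how any particular AIOps product works: a per-kind score stands in for learned significance, a fixed time gap stands in for correlation, and operator feedback nudges the scores with a simple moving-average update. The `Alert` type, the 60-second window, the 0.5 threshold and the 0.2 learning rate are all hypothetical choices.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Alert:
    host: str
    kind: str  # hypothetical event type, e.g. "linkDown"
    t: float   # seconds since some reference time


class TriageModel:
    """Toy significance + correlation model that learns from operator feedback."""

    def __init__(self, window=60.0, threshold=0.5, rate=0.2):
        self.window = window        # max gap (s) between alerts in one incident
        self.threshold = threshold  # minimum score for an alert to be shown
        self.rate = rate            # how far each verdict moves a score
        # Every alert kind starts at a neutral significance of 0.5.
        self.score = defaultdict(lambda: 0.5)

    def significant(self, alerts):
        """Keep only alerts whose kind currently scores at or above the threshold."""
        return [a for a in alerts if self.score[a.kind] >= self.threshold]

    def correlate(self, alerts):
        """Group time-sorted alerts; start a new incident when the gap exceeds the window."""
        incidents = []
        for a in sorted(alerts, key=lambda x: x.t):
            if incidents and a.t - incidents[-1][-1].t <= self.window:
                incidents[-1].append(a)
            else:
                incidents.append([a])
        return incidents

    def feedback(self, incident, was_real):
        """Move each kind's score toward 1.0 (real issue) or 0.0 (noise)."""
        target = 1.0 if was_real else 0.0
        for kind in {a.kind for a in incident}:
            self.score[kind] += self.rate * (target - self.score[kind])

    def triage(self, alerts):
        return self.correlate(self.significant(alerts))


model = TriageModel()
alerts = [
    Alert("sw-01", "linkDown", 0.0),
    Alert("web-01", "http_5xx", 20.0),
    Alert("web-01", "disk_80pct", 30.0),
    Alert("db-01", "backup_done", 500.0),
]
before = model.triage(alerts)              # two incidents: t=0..30 and t=500
model.feedback(before[0], was_real=True)   # operators confirm the outage
model.feedback(before[1], was_real=False)  # ...and dismiss the backup notice
after = model.triage(alerts)               # backup_done (score 0.4) is filtered out
```

Real systems would correlate on topology, message similarity and much more than timing, but the shape of the loop is the same: the more verdicts operators record, the less noise reaches them.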

This learning can even be applied to the evaluation process itself, watching what users find most interesting and valuable, and extrapolating from that to present the most significant data first, or looking for patterns in human interaction to assemble the most effective teams to work on particular types of incident.
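The first idea, learning from what users engage with, could be sketched as a ranker that counts how often operators act on each kind of alert and orders new alerts by that rate. This is an illustrative assumption rather than a description of any real product: the `EngagementRanker` class, the notion of “acted on”, and the add-one (Laplace) smoothing are choices made for the sketch, and team assembly is not covered.

```python
from collections import Counter


class EngagementRanker:
    """Hypothetical ranker: orders alert kinds by how often operators acted on them."""

    def __init__(self):
        self.shown = Counter()  # times each kind was presented to an operator
        self.acted = Counter()  # times an operator actually acted on it

    def record(self, kind, acted_on):
        self.shown[kind] += 1
        if acted_on:
            self.acted[kind] += 1

    def rate(self, kind):
        # Add-one smoothing: an unseen kind scores (0 + 1) / (0 + 2) = 0.5.
        return (self.acted[kind] + 1) / (self.shown[kind] + 2)

    def rank(self, kinds):
        # Highest engagement first; sorted() stays stable even with reverse=True.
        return sorted(kinds, key=self.rate, reverse=True)


ranker = EngagementRanker()
for _ in range(8):
    ranker.record("http_5xx", acted_on=True)
for _ in range(2):
    ranker.record("http_5xx", acted_on=False)
for _ in range(10):
    ranker.record("disk_80pct", acted_on=False)
ranker.record("linkDown", acted_on=True)

order = ranker.rank(["disk_80pct", "new_kind", "linkDown", "http_5xx"])
```

The smoothing means a kind of alert nobody has seen before starts in the middle of the list rather than at the top or bottom, which keeps new kinds of trouble visible until operators have had a chance to react to them.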

This is what AIOps is all about. Don’t assume that the sort of consumer-grade AI that you see at CES is all that is going on in this field. AI has huge potential to help streamline enterprise IT Ops, by presenting human specialists with actionable events, helping them collaborate more effectively, and learning and improving over time.

Once the CES hype has died down, take a good look at AIOps and start thinking about how it can fit into your plans for 2018.