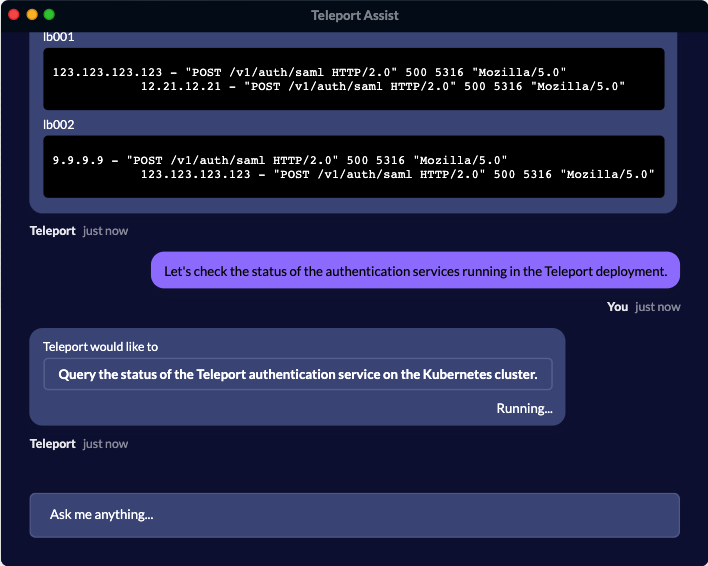

Teleport has made available a chat interface, dubbed Teleport Assist, that makes use of OpenAI’s generative artificial intelligence (AI) application programming interfaces (APIs) to enable DevOps teams to troubleshoot infrastructure issues more easily.

Using the natural language interface (NLI) provided by Teleport Assist, DevOps engineers can ask questions and give instructions in English to massive fleets of servers. Capabilities include suggesting commands and scripts for debugging and resolving common issues, and generating commands that engineers can review before they are applied.
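The review-before-apply step can be pictured with a short Python sketch. This is not Teleport's API or implementation; `ProposedCommand`, `review` and `run_approved` are hypothetical names invented for illustration, and the sketch only assumes that an assistant hands back a list of suggested shell commands that a human must approve before anything runs.

```python
import shlex
import subprocess
from dataclasses import dataclass


@dataclass
class ProposedCommand:
    """A command suggested by an assistant, awaiting human review (hypothetical type)."""
    command: str
    rationale: str
    approved: bool = False


def review(proposals, approve):
    """Record the reviewer's decision on each proposal.

    `approve` is any callable that takes a ProposedCommand and returns True or False,
    for example a prompt shown to the engineer.
    """
    for proposal in proposals:
        proposal.approved = bool(approve(proposal))
    return proposals


def _run_locally(command):
    """Default runner: execute the command on the local machine and return its stdout."""
    result = subprocess.run(
        shlex.split(command), capture_output=True, text=True, check=False
    )
    return result.stdout


def run_approved(proposals, runner=_run_locally):
    """Run only the approved commands and return a mapping of command to output."""
    return {p.command: runner(p.command) for p in proposals if p.approved}
```

In practice, `approve` would be an interactive confirmation and `runner` would dispatch the command to the target servers; both are left pluggable here so that nothing executes without an explicit approval.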

Teleport Assist currently supports Secure Shell (SSH) and Bash scripts. Future versions will add support for other cloud resources, such as SQL databases and Kubernetes clusters, along with additional languages and interfaces.

Teleport CEO Ev Kontsevoy said it’s already clear that many of the scripts that DevOps engineers rely on today for IT management will be replaced by generative AI platforms. The average IT administrator will be able to invoke DevOps platforms via an NLI that eliminates the need for extensive programming expertise, he added.

In effect, the NLI surfaced by generative AI platforms will serve to democratize DevOps expertise, Kontsevoy noted.

It’s still early days as far as the adoption of generative AI by DevOps engineers is concerned, but it’s already apparent that its impact will be profound. It will increase the pace at which applications are built while making it easier for software engineers to manage large codebases. Most of the generative AI advances thus far improve developer productivity, but tools such as Teleport Assist show how generative AI will also make it easier for more organizations to adopt DevOps best practices that previously were limited to organizations that could afford to hire and retain significant numbers of software engineers.

At their core, generative AI platforms are fundamentally changing the way humans interact with machines. Instead of requiring a developer or engineer to create a level of abstraction to communicate with a machine, it’s now possible for machines to understand the language humans use to communicate with each other. A natural language interface will soon enable DevOps engineers to ask generative AI platforms to not only surface suggestions but also debug applications and even write code. The accuracy and quality of those results will vary depending on how the large language models (LLMs) underlying those platforms were trained, but over time there will be a wide range of LLMs that are trained to be used within specific domains such as DevOps.

The issue is determining how to organize DevOps teams as more tasks become automated. Generative AI doesn’t replace the need for IT professionals, but it does make it simpler to manage IT at unprecedented levels of scale. In fact, at this point, the proverbial generative AI genie is out of the bottle, so there is no going back. The only thing left to decide is how quickly generative AI becomes pervasively applied.