Zerve today expanded its platform for visualizing complex IT infrastructure to include multiple generative artificial intelligence (AI) agents capable of planning, provisioning and building IT infrastructure, including the data workflows that developers need to drive applications.

Announced at the Open Data Science Conference (ODSC), the core Zerve Operating System has also been updated to include a distributed computing engine, dubbed Fleet, that uses serverless technology to enable massively parallel code execution via a single command.

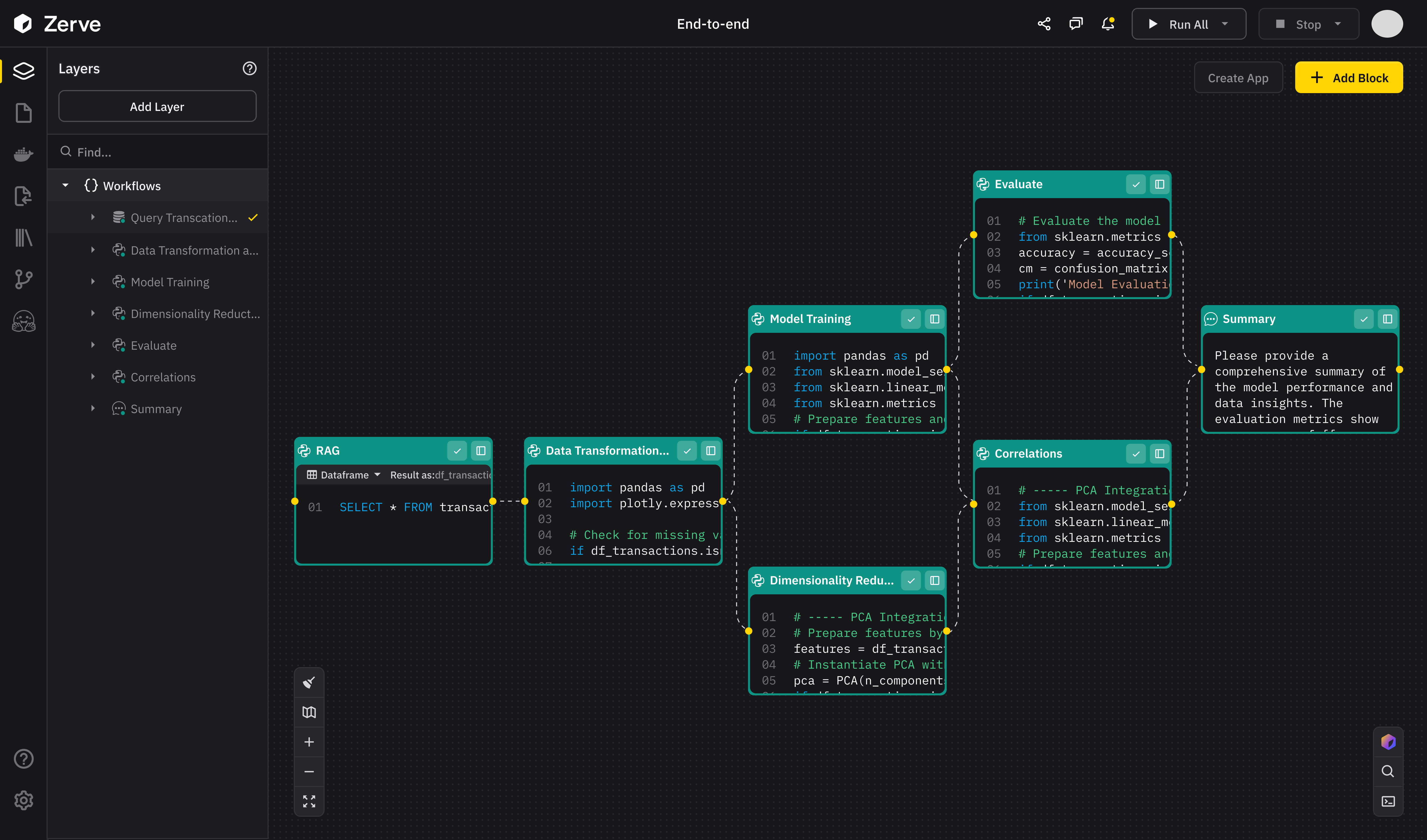

The AI agents that Zerve has added to the Zerve Operating System, which is designed to be hosted in an on-premises IT environment, can present a plan and then generate Zerve canvases by creating and connecting code blocks, writing code, orchestrating infrastructure and automating various parts of the data workflow as needed. If an agent encounters an error or an experiment fails, it automatically tries a different approach.
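The "try a different approach" behavior amounts to a fallback loop. The sketch below is a generic illustration, not Zerve's implementation or API: it assumes each candidate approach is a plain Python function (`load_from_api` and `load_from_cache` are hypothetical names) that raises an exception on failure. The loop tries each approach in turn and stops at the first one that succeeds.

```python
from typing import Callable, List, Optional

# Hypothetical "approach": a function that attempts one step of a data
# workflow and raises an exception if it fails. Not part of any Zerve API.
Approach = Callable[[], str]


def run_with_fallbacks(approaches: List[Approach]) -> Optional[str]:
    """Try each approach in order and return the first successful result."""
    errors = []
    for attempt, approach in enumerate(approaches, start=1):
        try:
            return approach()
        except Exception as exc:  # record the failure, then try the next approach
            errors.append(f"attempt {attempt} ({approach.__name__}): {exc}")
    # Every approach failed; surface the collected errors for human review.
    print("\n".join(errors))
    return None


def load_from_api() -> str:
    # Hypothetical first attempt that fails.
    raise ConnectionError("endpoint unreachable")


def load_from_cache() -> str:
    # Hypothetical fallback that succeeds.
    return "rows loaded from cache"


result = run_with_fallbacks([load_from_api, load_from_cache])
print(result)  # rows loaded from cache
```

A real agent would presumably generate its alternative approaches on the fly rather than pick from a fixed list. The fixed list simply keeps the retry logic visible.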

Zerve CEO Phily Hayes said the overall goal is to provide DevOps teams with a set of AI agents that, via a natural language interface, can automate many of the manual tasks that previously made provisioning IT infrastructure tedious.

Combined with Fleet, the agents can also make multiple calls to large language models more efficiently, he noted.
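The efficiency gain from parallel LLM calls comes from running independent, latency-bound requests at the same time instead of one after another. The sketch below shows that fan-out idea on a single machine with Python's standard `concurrent.futures.ThreadPoolExecutor`. It is not Fleet, which Zerve describes as a serverless, distributed engine. The `call_llm` function is a hypothetical stub that sleeps to simulate network latency.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List


def call_llm(prompt: str) -> str:
    """Hypothetical stand-in for a network call to an LLM endpoint."""
    time.sleep(0.1)  # simulate I/O latency of a remote model call
    return f"summary of: {prompt}"


def fan_out(prompts: List[str], max_workers: int = 8) -> List[str]:
    # pool.map runs the calls concurrently but yields results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(call_llm, prompts))


prompts = [f"table_{i}" for i in range(8)]
start = time.perf_counter()
results = fan_out(prompts)
elapsed = time.perf_counter() - start
print(results[0])  # summary of: table_0
```

Eight sequential calls would take roughly 0.8 seconds here, while the concurrent version finishes in about the time of a single call. Threads suit this case because the work is I/O-bound.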

It’s not clear how widely AI is being applied to DevOps workflows, but a Futurum Research survey finds 41% of respondents now expect generative AI tools and platforms will be used to generate, review and test code.

In general, AI agents leverage the reasoning engines built into large language models (LLMs) not only to determine what line of code or word should come next in a sequence, but also to determine what task should be performed next. The degree to which those tasks can be automated depends on the amount of memory that the LLM and AI agent can provide.

Ultimately, the goal is not to replace software engineers but rather to augment them with a set of AI agents that make it possible to manage complex IT infrastructure at much higher levels of scale, said Hayes.

At this juncture, there is little doubt that AI agents will be incorporated into DevOps workflows, but each organization will need to determine what level of trust to place in them. Given the probabilistic nature of LLMs, it's unlikely an AI agent will perform the same task exactly the same way every time. However, there is a wide range of DevOps tasks where it may be less critical to understand exactly how resources were provisioned than to ensure the outcome was acceptable.

In other scenarios, however, the way IT infrastructure is provisioned may need to be very precise. While an AI agent can make that task less tedious, software engineers will still need to review those configurations.

Hopefully, AI agents will make being a software engineer more rewarding by reducing drudgery. The challenge is determining when and how to onboard AI agents into a DevOps workflow that DevOps teams continue to manage and orchestrate.