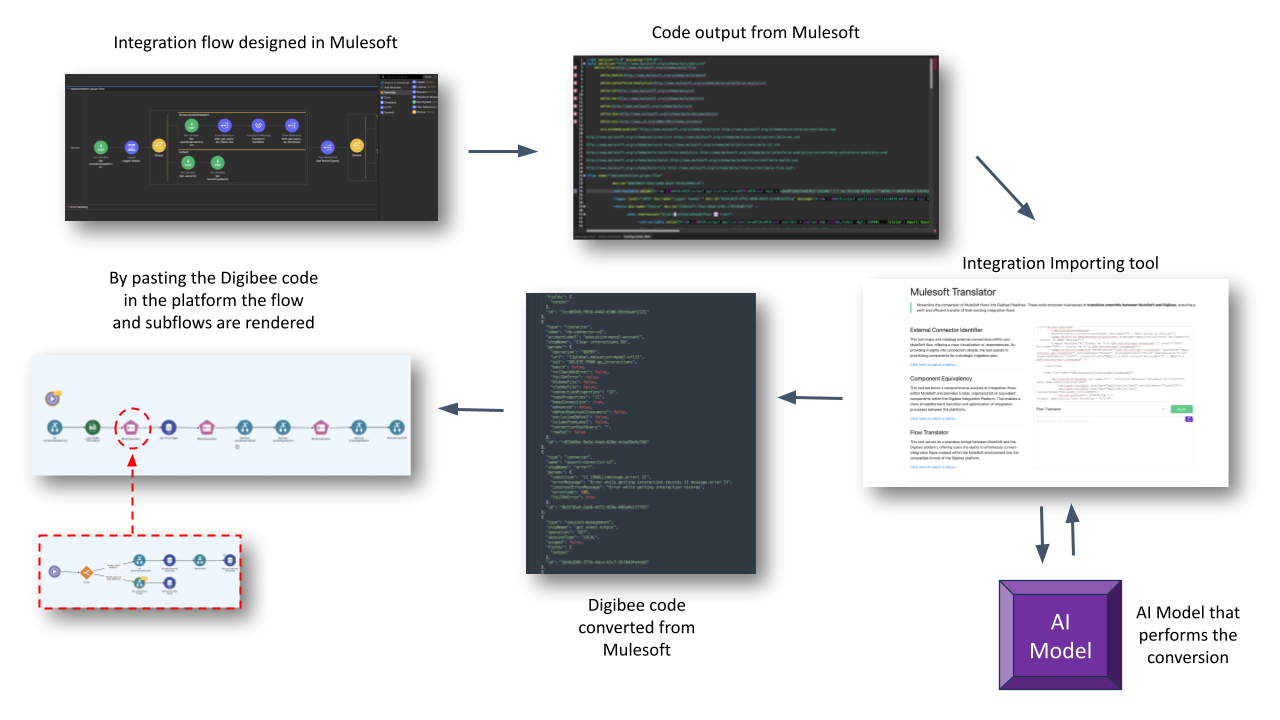

Digibee is using artificial intelligence (AI) to simplify migration to its integration platform-as-a-service (iPaaS) environment by converting legacy integration code into JavaScript Object Notation (JSON).

A translator tool created by Digibee invokes large language models (LLMs) to convert that code into a JSON file that can then run on the Digibee Integration Platform.

Initially, the capability is being offered to facilitate migrations from the MuleSoft integration platform owned by Salesforce, with support for other legacy integration platforms planned for 2024.

Digibee CTO Peter Kreslins Junior said this approach reduces the time and effort required to switch platforms by using generative AI to map and refactor legacy integration code. The refactoring process doesn’t completely automate the reverse engineering of workflows, but it does substantially reduce the total cost of switching platforms by, for example, automating data migration and simplifying the transfer of configurations, he noted.

Generative AI also eliminates the need to rely on documentation or maps that may be limited or nonexistent, Kreslins added. Instead, an LLM can now automatically discover all the integration patterns in use, he said.

IT teams will still need to vet and test the code created by the translator tool, but overall, generative AI should accelerate migration efforts in 2024. Many organizations are locked into various platforms today simply because reworking the code created to extend those platforms has required either too much internal effort or the hiring of expensive consultants. A migration project that might have taken a year or more can now be accomplished in a few days, said Kreslins. The overall goal is to reduce the pain IT teams currently experience when migrating between platforms, he added.

It’s not clear whether the rise of generative AI platforms that simplify converting code into other formats and languages will drive a wave of migrations, but many organizations rely on legacy platforms that are not nearly as easy to use as more modern alternatives. As the time and effort required to make those transitions shrink, more IT teams will, at the very least, be more open to considering their platform options. The providers of those legacy platforms will naturally have to invest in new capabilities much faster to discourage customers from migrating to another platform.

In the meantime, the amount of code that will need to be integrated is about to increase exponentially as developers rely more on LLMs to generate code. The downstream implications of that faster pace of application development for DevOps teams are profound. It’s not clear to what degree those teams can scale beyond the level at which they manage application deployments today.

The one thing that is clear is that there is no going back because, as far as application development and deployment are concerned, the proverbial AI genie is out of the bottle.